Context

Need for AI regulations

Approaches of different countries to regulate AI

European Union

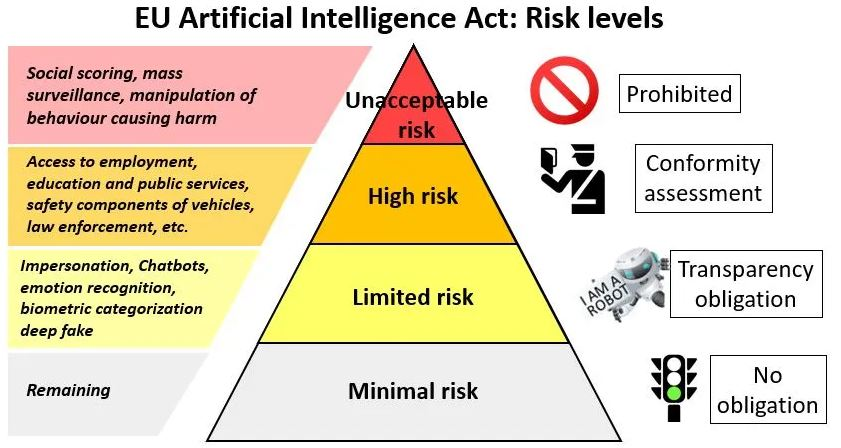

The EU AI Act takes a horizontal approach, meaning it applies across all sectors in which AI is used. It sorts AI systems into four risk categories, each with its own regulatory requirements:

China’s approach to AI

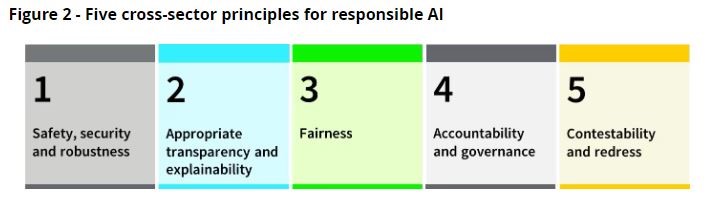

China’s rules seek to balance the development of AI with legal governance and are based on five key principles:

UK’s approach to AI

India’s approach to AI

India is developing a comprehensive Digital India Framework that will include provisions for regulating AI. The framework aims to protect digital citizens and ensure the safe and trusted use of AI.

Conclusion

PRACTICE QUESTION

Q. "What approaches have different countries taken to regulate the rapid advancement of AI technologies, and what regulatory measures should be implemented to address the concerns these technologies raise?" Examine. (250 words)

© 2024 iasgyan. All rights reserved.