Picture Courtesy: https://www.linkedin.com/pulse/european-union-20-million-euros-develop-on-demand-ai-platform-lopes

Context: The European Parliament recently passed the Artificial Intelligence Act to regulate artificial intelligence (AI). The legislation is the first comprehensive legal framework for governing AI, and it is expected to influence similar laws in other countries.

Details

Key Provisions of the EU AI Act

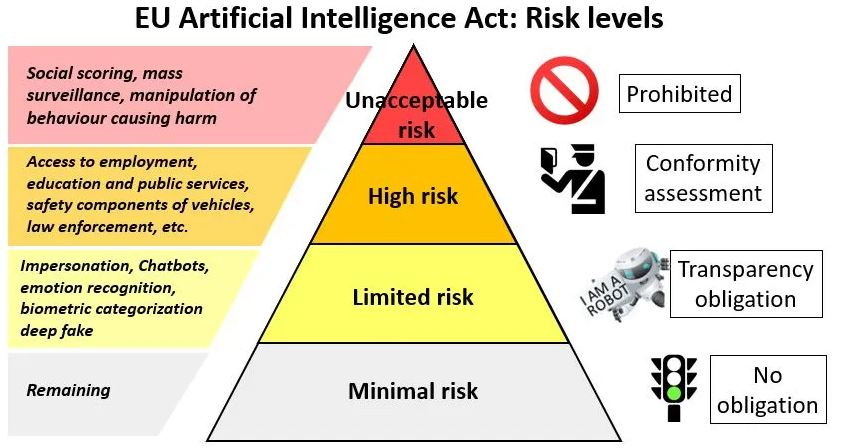

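The EU AI Act takes a horizontal approach. It applies across all sectors in which AI is used, rather than regulating one industry at a time. It sorts AI systems into four risk categories, each with its own regulatory requirements:
● Unacceptable risk: prohibited outright (for example, social scoring).
● High risk: permitted subject to strict obligations such as risk management, data governance and human oversight (for example, AI used in critical infrastructure, education or employment).
● Limited risk: subject to transparency obligations (for example, chatbots must disclose that users are interacting with a machine).
● Minimal risk: no additional obligations (for example, spam filters and AI-enabled video games).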

Significance

Challenges

AI Regulation in India
● The Union Ministry of Electronics and Information Technology (MeitY) is working on an AI framework.
● MeitY requires platforms in India to obtain government permission before launching "under-testing/unreliable" AI tools, such as Large Language Models (LLMs) and other generative AI models.
● For India, developing a framework for responsible AI regulation is essential. Recent updates show a willingness to adapt legislation to the fast-changing AI field, and India must strike a balance between protecting citizens' rights and encouraging innovation.

Conclusion

Must Read Articles:

European Union’s Artificial Intelligence Act: https://www.iasgyan.in/daily-current-affairs/european-unions-artificial-intelligence-act

PRACTICE QUESTION

Q. A facial recognition system used for airport security flags a passenger as a potential security threat. On investigation, it turns out the flag was triggered by a bias in the training data: the system disproportionately identified people of a specific ethnicity as threats. In this scenario, which of the following actions would be MOST important to address the ethical concerns of AI bias?
A) Immediately remove the flagged passenger and increase security measures.
B) Fine-tune the facial recognition system with more balanced training data.
C) Develop a human oversight system to review all flagged individuals.
D) Alert the public about the limitations of the facial recognition system.

Answer: B

Explanation: Option A reacts to the flag rather than to the bias that produced it, so it does not address the ethical concern. A long-term solution requires correcting the bias itself, and Option B tackles this root cause. Option C is a useful secondary safeguard, but it does not remove the bias from the system. Raising public awareness (D) is valuable, but it also leaves the bias in place.

© 2024 iasgyan. All rights reserved.