Source: BusinessStandard

Disclaimer: Copyright infringement not intended.

Context

Details

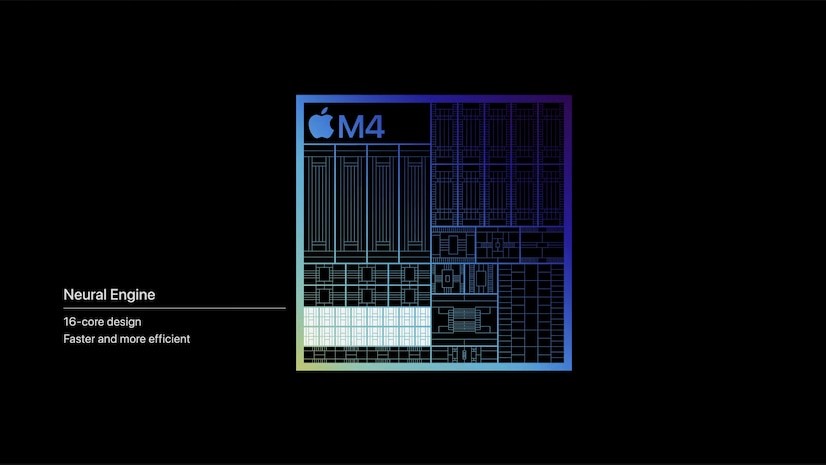

What is NPU (Neural Processing Unit)?

Integration in Consumer Devices:

Role in Data Centers:

Key Features of NPUs:

NPU Applications:

Distinctions between NPUs, CPUs, and GPUs

CPU (Central Processing Unit):

GPU (Graphics Processing Unit):

NPU (Neural Processing Unit):

Key Differences:

On-Device AI and the Role of NPUs

Sources:

PRACTICE QUESTION

Q. While CPUs, GPUs, and NPUs all contribute to computing capabilities, NPUs stand out for their specialization in accelerating AI workloads, offering superior efficiency and performance for tasks like deep learning inference. Comment. (250 words)

© 2024 iasgyan. All rights reserved